On April 16, 2025, OpenAI introduced two new AI reasoning models, o3 and o4-mini. They mark a significant jump in the company’s AI capabilities, perhaps most evident in their new image reasoning.

These New Models Can “Think” With Images

OpenAI says these new models can interpret any image you upload, such as a whiteboard sketch, a textbook diagram, or a graphic PDF. The release announcement for OpenAI o3 and o4-mini says:

They don’t just see an image—they think with it. This unlocks a new class of problem-solving that blends visual and textual reasoning, reflected in their state-of-the-art performance across multimodal benchmarks.

Image analysis is part of the models’ chain-of-thought reasoning. The models can zoom, rotate, or crop images as they work through a problem, and they remain adept even with low-quality images.

For instance, when solving a scientific problem involving a diagram, the model might zoom into a specific part of the image, run calculations with Python, and then generate a graph to explain its findings.
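To make the zoom, rotate, and crop operations concrete, here is a minimal Python sketch that applies them to a tiny grayscale image stored as a list of rows. It only illustrates what the operations mean. OpenAI has not published how the models carry them out internally, and the function names `crop`, `rotate_90_clockwise`, and `zoom` are hypothetical.

```python
from typing import List

# A grayscale image as rows of pixel values (illustrative representation only).
Image = List[List[int]]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Keep only the rectangle starting at (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate by reversing the row order and then transposing."""
    return [list(col) for col in zip(*img[::-1])]


def zoom(img: Image, factor: int) -> Image:
    """Enlarge by repeating each pixel `factor` times (nearest neighbor)."""
    out: Image = []
    for row in img:
        wide = [pixel for pixel in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

region = crop(image, top=0, left=1, height=2, width=2)
rotated = rotate_90_clockwise(region)
enlarged = zoom(rotated, 2)

for row in enlarged:
    print(row)
```

A real pipeline would typically use an imaging library rather than nested lists. The point here is only that each step narrows or reorients the view before further analysis.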

While reasoning, o3 and o4-mini can dynamically use all available ChatGPT tools, including web browsing, Python code execution, and image generation. This agentic capability lets them automatically pick the most suitable tool for a given task, so users and developers can run multi-step workflows and tackle complicated tasks.
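The idea of chaining tools in a multi-step workflow can be sketched roughly as follows. This is a toy illustration, not OpenAI’s implementation: the three tool functions are stand-in stubs, the `TOOLS` registry and `run_workflow` helper are hypothetical names, and the hard-coded `plan` takes the place of the decisions the model would make for itself while reasoning.

```python
from typing import Callable, Dict, List, Tuple


def web_search(query: str) -> str:
    # Stub: a real tool would fetch pages from the web.
    return f"[search results for: {query}]"


def run_python(numbers: str) -> str:
    # Stub: averages a comma-separated list instead of running arbitrary code.
    values = [float(x) for x in numbers.split(",")]
    return f"mean={sum(values) / len(values):.2f}"


def generate_image(description: str) -> str:
    # Stub: a real tool would return an image, not a label.
    return f"[image: {description}]"


# Hypothetical registry mapping tool names to callables.
TOOLS: Dict[str, Callable[[str], str]] = {
    "web_search": web_search,
    "python": run_python,
    "image_gen": generate_image,
}


def run_workflow(plan: List[Tuple[str, str]]) -> List[str]:
    """Run each (tool name, input) step in order and collect the outputs."""
    transcript: List[str] = []
    for tool_name, tool_input in plan:
        tool = TOOLS.get(tool_name)
        if tool is None:
            transcript.append(f"unknown tool: {tool_name}")
            continue
        transcript.append(tool(tool_input))
    return transcript


# In ChatGPT the model chooses these steps itself; here they are fixed.
plan = [
    ("web_search", "quarterly revenue"),
    ("python", "10,20,30"),
    ("image_gen", "bar chart of revenue"),
]

results = run_workflow(plan)
for line in results:
    print(line)
```

The registry keeps the tools interchangeable: adding a capability means adding one entry, while the loop that executes the plan stays the same.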

o4-mini-high is a variant of o4-mini that spends more time and computational effort on each prompt to deliver higher-quality results. Some everyday scenarios for these models could be:

- Generating and evaluating studies in biology, engineering, and other STEM fields, offering detailed step-by-step reasoning and visual explanations.

- Searching and collating information from multiple sources, such as online databases, financial reports, market data, and charts, to generate business insights.

The models have been trained through reinforcement learning to reason about when to use a particular tool for a desired result. As a result, they handle fuzzier, more open-ended problems better.

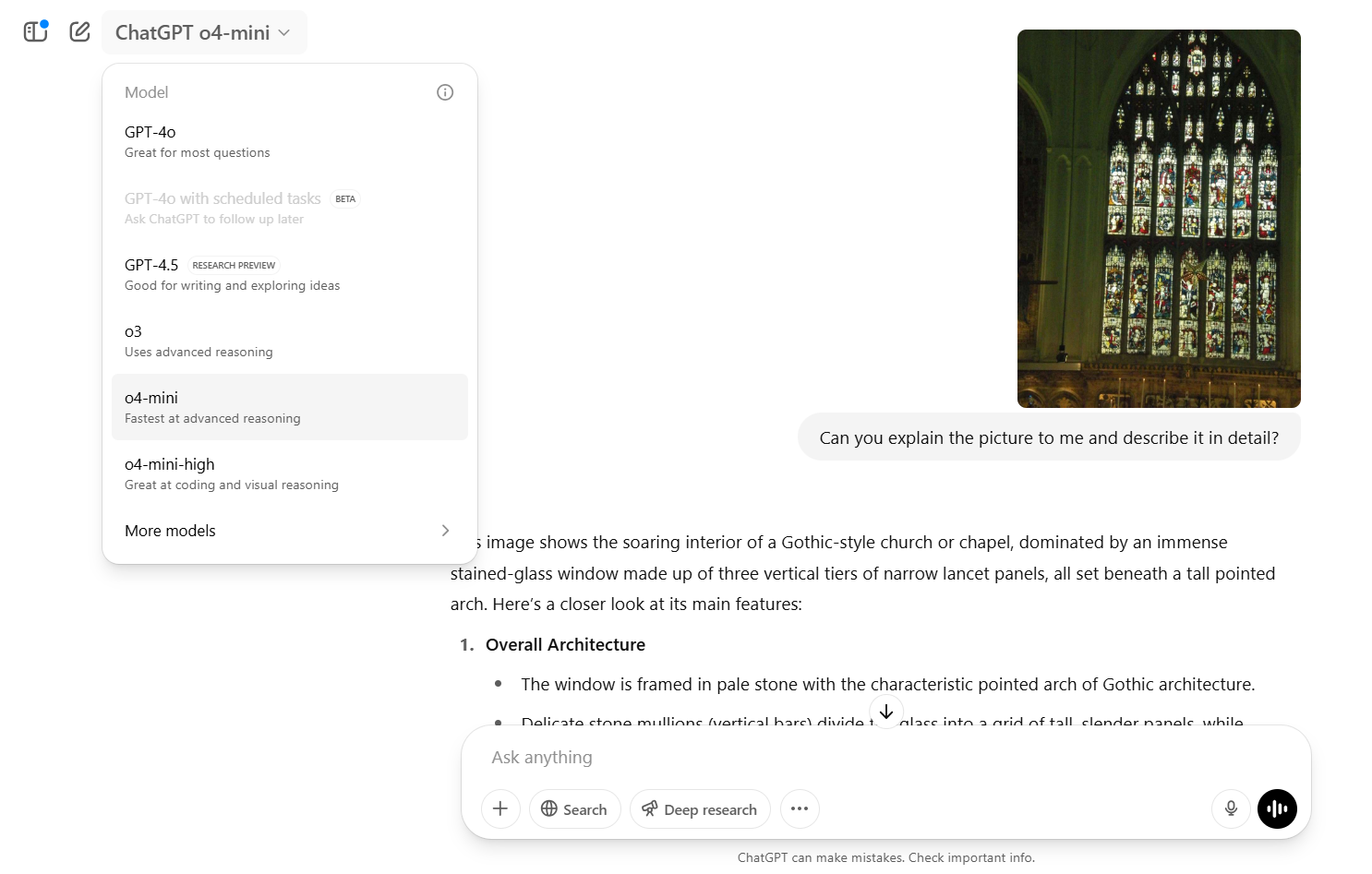

The o3, o4-mini, and o4-mini-high models are available to everyone with a ChatGPT Plus, Pro, or Team account, with o3-pro expected to launch in the coming weeks. They appear in the model selector menu.

Free users can experiment with the o4-mini model by choosing the Think option in the composer before submitting their requests.

Why ChatGPT’s Multimodal Capabilities Could Be Amazing

By enabling AI to “think with images,” OpenAI’s new models can tackle real-world problems that require interpreting both text and visuals. This includes debugging code from screenshots, reading handwritten text, analyzing scientific diagrams, or extracting insights from complex charts. The result? ChatGPT has become more context-aware.

The models are now more autonomous. They may also be more efficient, since they independently match the right tool to each task. Because these autonomous AI agents can handle complex, multi-step tasks, their reasoning capabilities and visual intelligence could make them valuable in fields such as research, business, and creative work.
