That innocent chat with Meta AI about your job, health, or legal problems? It might already be public. Meta tucked a dangerous setting into its app, and it’s leaking people’s personal lives into the feed.

Meta AI isn’t just an AI chatbot; it’s also a social feed. While you’re chatting with what feels like a private assistant, there’s a chance those conversations could be published to a public Discover tab, often complete with your username and profile photo.

While sharing isn’t automatic, it’s alarmingly easy to do by accident. A few taps can send your query into the public feed. Many users don’t even notice, especially when they’re just testing features or playing with image generation.

It’s already led to some serious oversharing. As per TechCrunch, one user asked for help writing a character reference letter for an employee facing legal trouble, using the employee’s full name. Others have posted prompts about tax evasion, medical conditions, or court issues, seemingly unaware that they were publishing sensitive details to a public timeline.

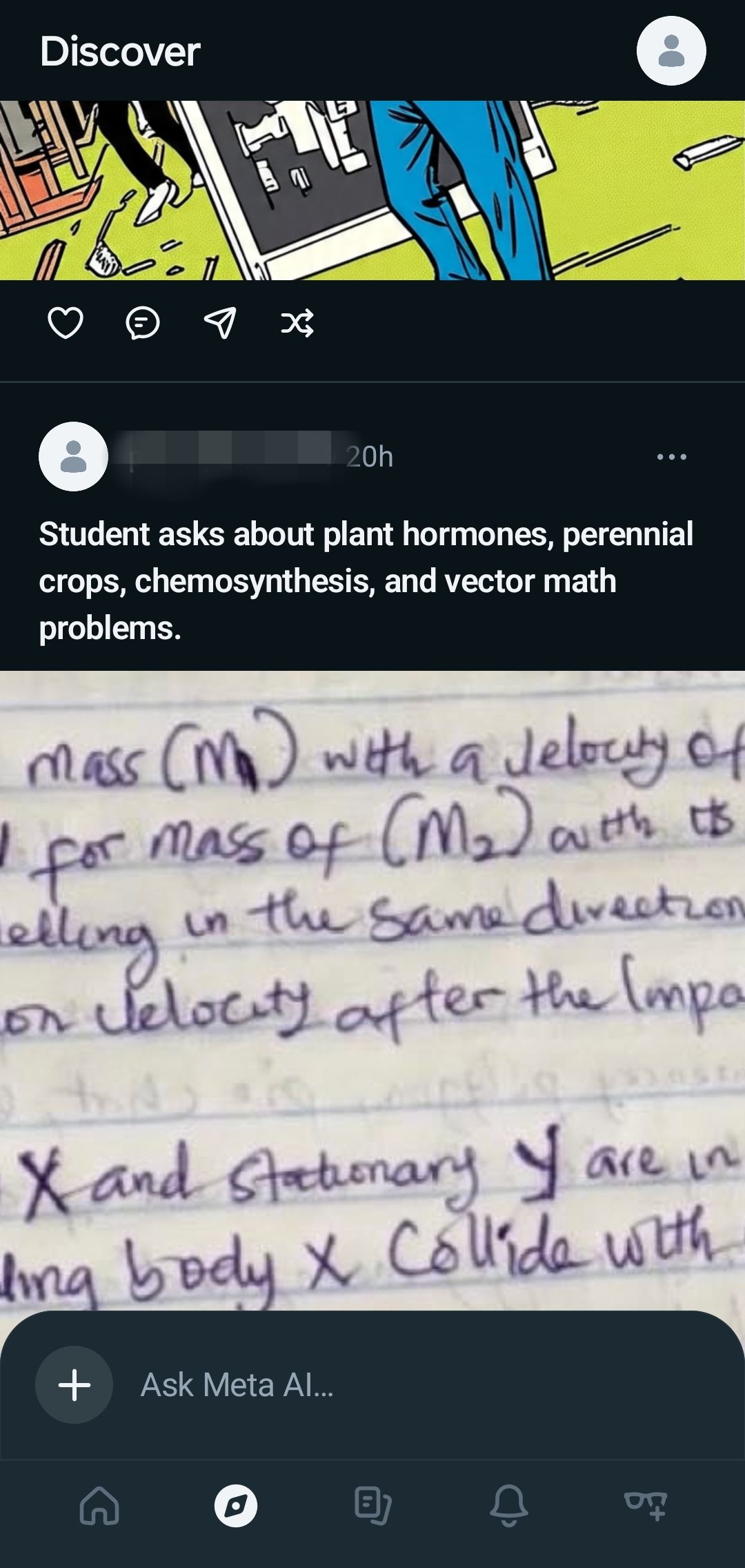

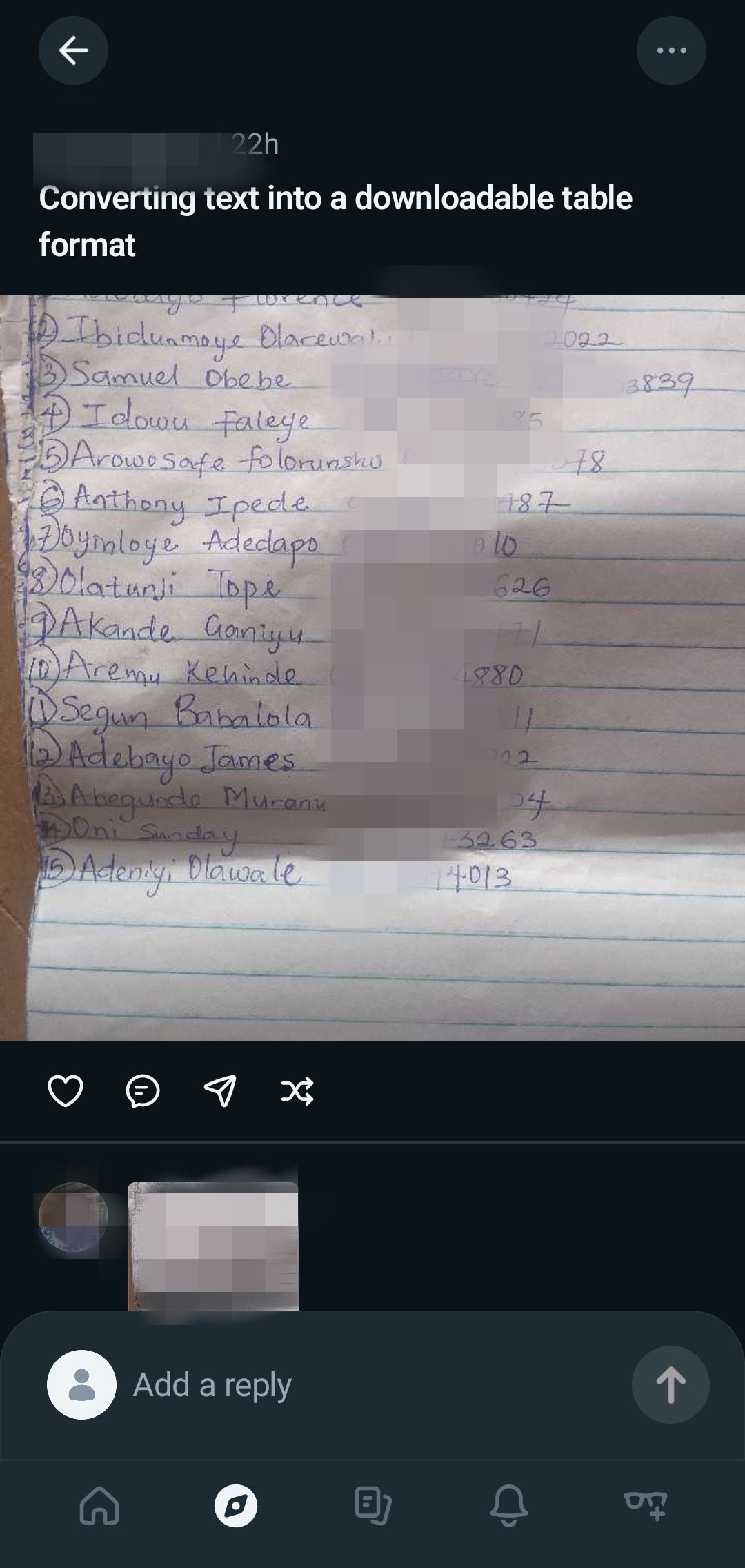

I’ve seen it firsthand, too. While browsing the Meta AI app, I came across students uploading homework assignments and asking the AI to help them with the answers. In another case, someone pasted a list of names with phone numbers into the chat and asked Meta AI to format them into text. It’s hard to believe any of these users meant to broadcast that information; it just shows how easy it is to misstep. Some prompts also touch on far more unsavory topics.

Even if you understand the share function, it’s still easy to post something you meant to keep private. And the worst part? You might not realize it until your prompt shows up in someone else’s feed.

Why This Matters (Even If You Think It Doesn’t)

It’s easy to shrug this off. “Who cares if someone sees my silly AI prompt?” But the implications go beyond embarrassment.

Imagine asking Meta AI how to break a lease in your city, mentioning your landlord’s name, or uploading a photo to ask about a rash. Those details, when shared publicly, don’t just vanish into the digital void. They stick. They can be screen-captured, reposted, and traced back to you. If your Meta AI profile is tied to your public Instagram account, it might even expose your full name, your face, or your location.

That’s not just awkward—it’s a potential security risk. Experts have already noted examples of users unintentionally sharing court documents, medical history, or the names of minors. Trolls, scammers, or even future employers could come across posts that were never meant to be seen.

If you’re using Meta AI and haven’t reviewed your privacy settings, you’re taking a risk. A single careless tap can expose your thoughts and questions to the world.

Chats are private by default, but sharing them is far too easy, so it’s worth taking a minute to double-check your settings in the Meta AI app:

- Open the Meta AI app.

- Tap your profile photo in the top-right corner.

- Scroll down and tap Data and Privacy.

- Tap Suggesting your prompts on other apps.

- Turn off the toggles for Facebook and Instagram.

- Return to the Data and Privacy screen and select Manage your information.

- Tap Make all your public prompts visible only to you and select Apply to all.

These changes take only a minute but can save you from accidentally broadcasting something you meant to keep private.

A Quick Rule of Thumb for Using AI Safely

We’ve previously shared guides on questions you should never ask an AI tool, as well as tasks you should never outsource to AI. The short version: if you wouldn’t shout it in a crowded café, don’t type it into an AI.

No matter how trivial or casual a prompt might seem, if it includes anything you wouldn’t want a stranger (or your boss, your ex, or a journalist) to read, assume it could be seen.

Think of AI platforms less like private journals and more like open microphones. They’re useful, even friendly, but they’re not confidential. Even if you don’t hit “share,” anything you type might be stored, analyzed, or reused.

AI tools are convenient, but they’re still owned by companies with their own incentives. And user privacy is not typically near the top of the priority list, despite what most AI chatbot developers claim. So be smart, be skeptical, and above all, be careful.
